POP

Nov 2020 - Apr 2021

The climate emergency is the greatest threat to our planet this century. Key to solving this crisis is the circular economy: a way for us to re-think how we use products and services. Critical to circularity is the idea of repairing products, rather than throwing them away.

But today, repair is too slow and difficult to be affordable in most cases. Millions of electronic products are thrown away each year when they fail, likely because a single component has broken. POP is a new type of tool that makes repair as easy as taking a photograph. Within seconds, repairers can diagnose complex problems with circuit boards, drawing upon the wisdom of the crowd to save both time and money for consumers. Once they know the problem, they can order the replacement part with the tap of a button.

POP uses thermal imaging to compare a thermal image of a broken board against a known-good image of the same board, allowing it to accurately diagnose a variety of electronics issues in a way that is sensitive to the context of the board.
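As a rough illustration of the comparison described above, the sketch below flags pixels where a faulty board's temperature differs from the known-good reference by more than a threshold. It is not POP's actual algorithm: the function name `find_hotspots`, the 10 °C default threshold, and the assumption that both images are already aligned and at the same resolution are all hypothetical.

```python
from typing import List, Tuple

Grid = List[List[float]]  # temperatures in degrees Celsius, one list per image row


def find_hotspots(
    faulty: Grid, reference: Grid, threshold_c: float = 10.0
) -> List[Tuple[int, int, float]]:
    """Return (row, col, delta) for pixels deviating from the reference.

    Assumes both images are aligned and share the same shape; a real
    system would need to register the two images first.
    """
    if len(faulty) != len(reference) or any(
        len(a) != len(b) for a, b in zip(faulty, reference)
    ):
        raise ValueError("images must have the same shape")

    hotspots = []
    for r, (faulty_row, ref_row) in enumerate(zip(faulty, reference)):
        for c, (t_faulty, t_ref) in enumerate(zip(faulty_row, ref_row)):
            delta = t_faulty - t_ref
            # Both unusually hot and unusually cold regions can indicate a fault.
            if abs(delta) > threshold_c:
                hotspots.append((r, c, delta))

    # Report the largest deviations first.
    hotspots.sort(key=lambda h: abs(h[2]), reverse=True)
    return hotspots
```

A component running far hotter than on the reference board (for example, a shorted part) or far colder (a part that is not powered) would both show up near the top of the returned list.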

Without POP, diagnosing electronics issues is a challenging and often time-consuming process.

FieldTrack

2020 - 2021

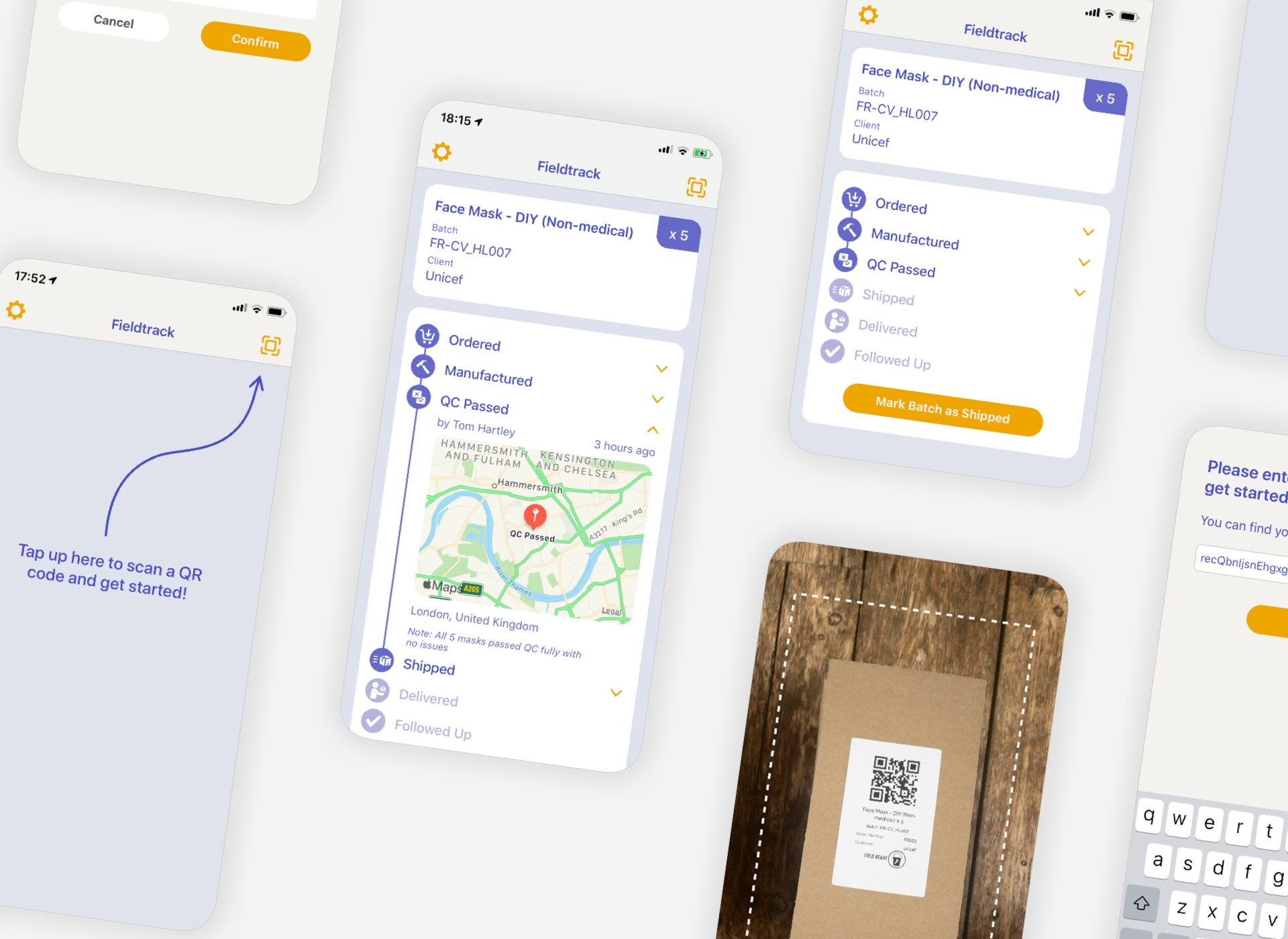

FieldTrack is an internal tool created for Field Ready, a global NGO that operates in more than 10 countries and pushes for greater adoption of localised manufacturing.

Working in a three-person team, we were funded to tackle the challenge of labelling, tracking and monitoring products across a distributed supply chain. Field Ready’s infrastructure requirements meant that a custom system would likely be hard to maintain and extend in the long run, so we designed a system that used as many off-the-shelf components as possible. A front-end app tied them together and acted as the main interface for tracking and monitoring all products manufactured and distributed by Field Ready.

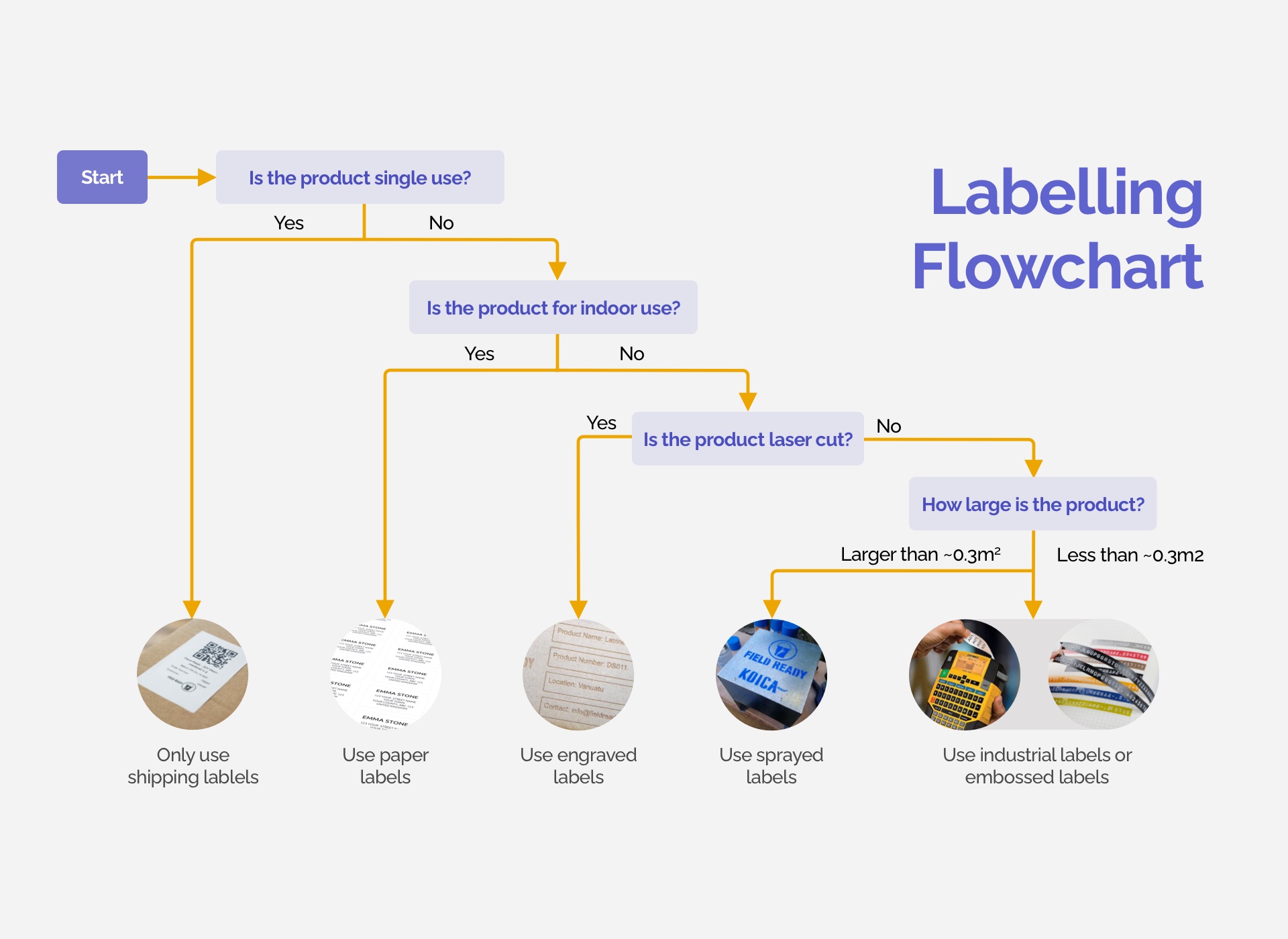

Rather than using a single labelling technology to label all of Field Ready's products, we proposed a variety of technologies to be used depending on the material, lifetime and size of the product. They all displayed a consistent product number.

Sony D&AD

July 2019

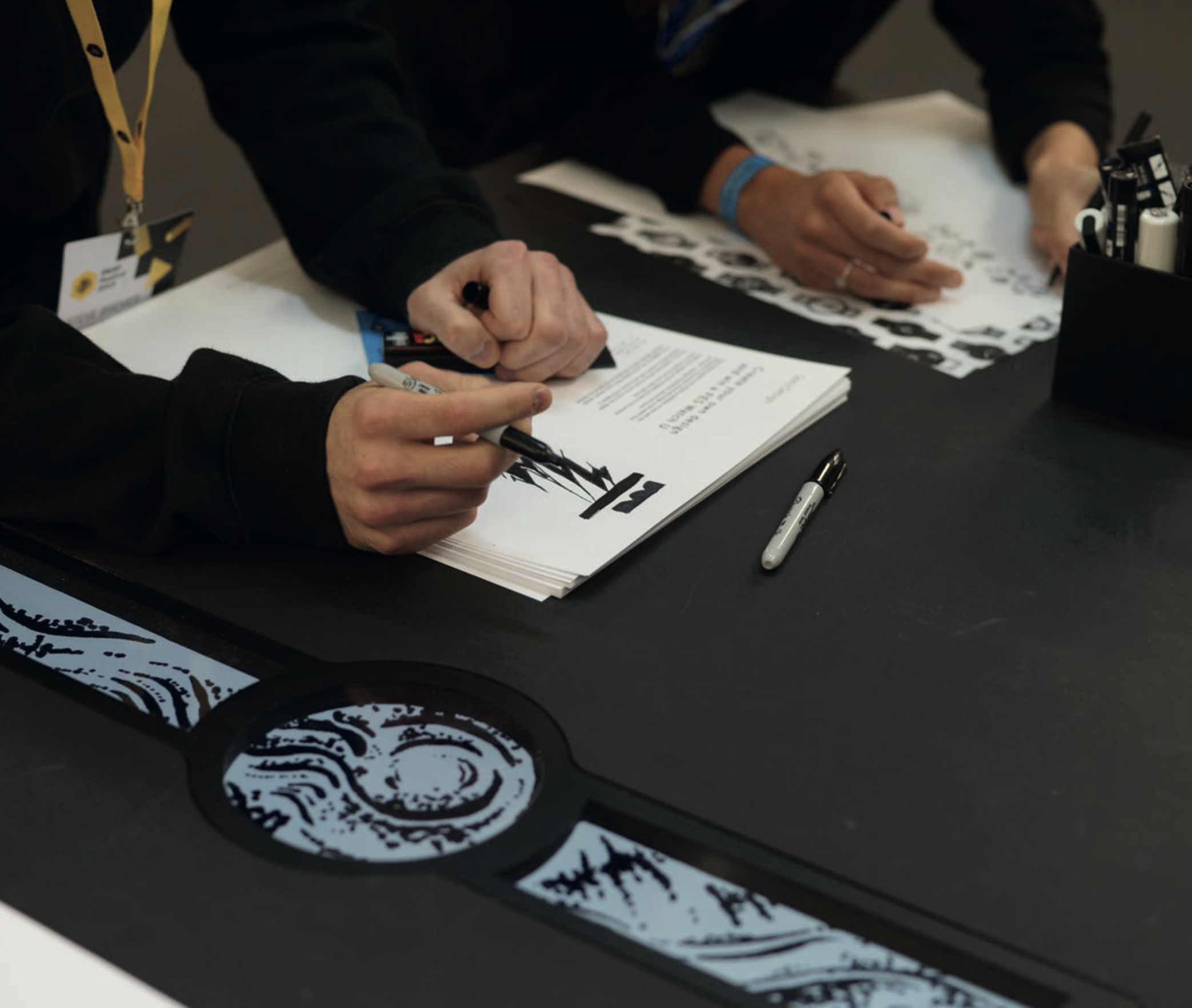

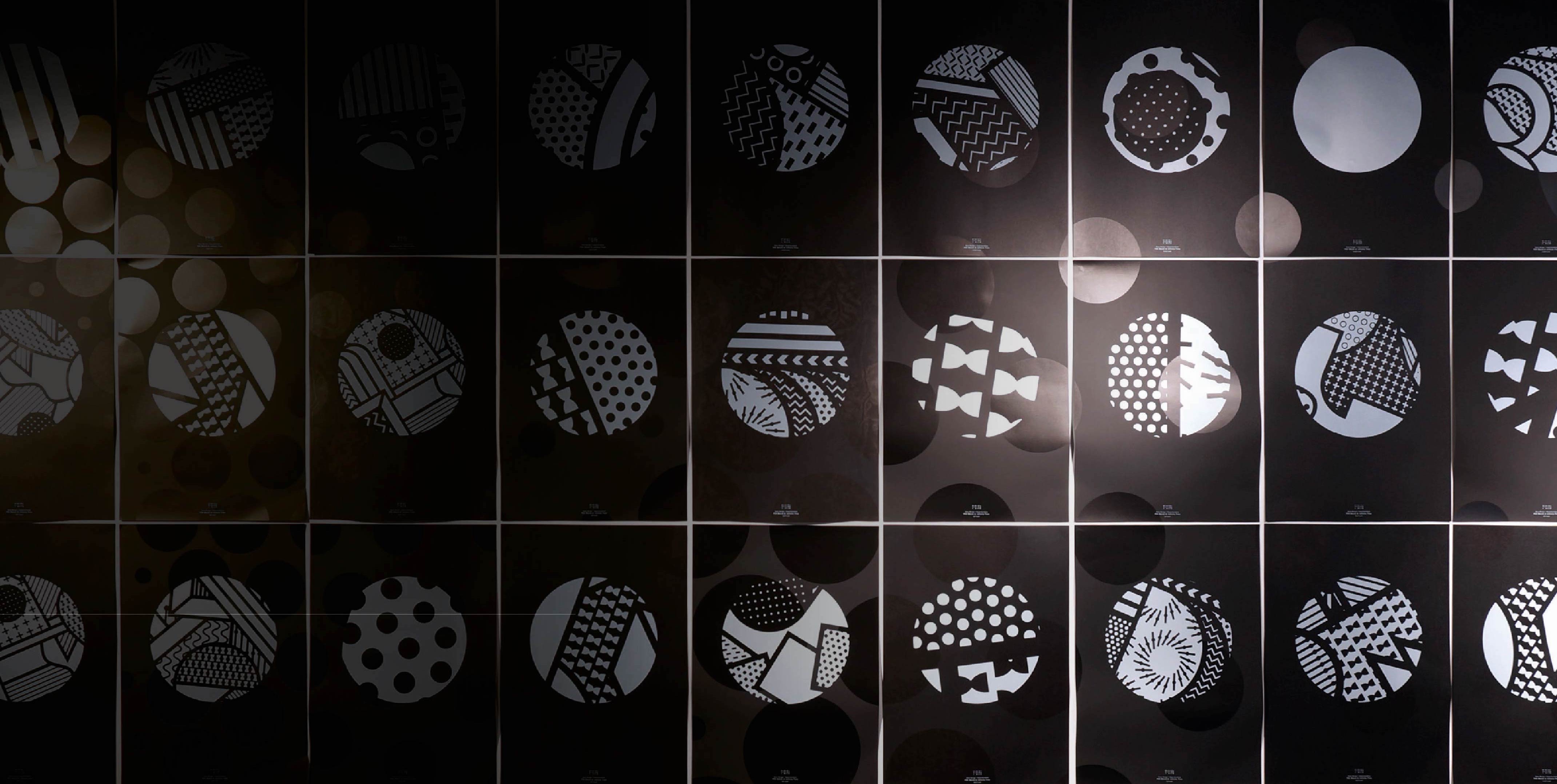

As part of a campaign for a new customisable watch, Sony Europe were looking to increase engagement at a trade show stand. I worked with their team to design and implement two engaging interactive components of the stand. The first of these drew attention to the watch’s full-size e-paper screen by automatically showing a poster of the user’s choice on a large-scale model of the watch. The other allowed people to create their own designs and scan them into the system to be shown on another large watch. These interactions led to a high volume of social engagement across the event, with visitors sharing photos of their watch-face sketches online. Both interactions were implemented using custom computer-vision software.

Tuonge

April 2019

Access to sexual and reproductive health (SRH) information in Kenya is limited. In this collaborative project, we adopted a co-design approach to support parents when teaching their children these topics. Through interviews, we identified that children trusted parents as a source of information, but that parents didn’t necessarily have the tools they needed to answer questions.

With limited smartphone penetration in the target demographics, we designed a system using the feature-phone-supported USSD protocol that allowed parents to quickly and easily gather information to teach their children about SRH matters, curated by gender, age and subject. After testing with users and refining the app, we then launched the service through a feature on a community radio station.
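To give a sense of how a USSD menu curated by gender, age and subject might be navigated, here is a minimal sketch. Everything in it is hypothetical: the menu labels, the placeholder messages, the `respond` function, and the assumption that the gateway passes the digits entered so far as a single `*`-separated string. It does not reproduce Tuonge's real content or code.

```python
# Hypothetical menu tree: each digit maps to (label, submenu or final message).
MENU = {
    "1": ("Child is a girl", {
        "1": ("Age 10-12", {
            "1": ("Topic A", "[placeholder message A]"),
            "2": ("Topic B", "[placeholder message B]"),
        }),
    }),
    "2": ("Child is a boy", {
        "1": ("Age 10-12", {
            "1": ("Topic A", "[placeholder message C]"),
        }),
    }),
}


def render(menu, title):
    """Format a menu level as the plain text shown on a feature phone."""
    lines = [title] + [f"{key}. {label}" for key, (label, _) in sorted(menu.items())]
    return "\n".join(lines)


def respond(text):
    """Return (screen_text, session_finished) for the input so far.

    `text` is assumed to hold every digit the user has entered in this
    session, joined by '*' (for example "1*1*2"); it is empty at the start.
    """
    node = MENU
    for choice in filter(None, text.split("*")):
        if choice not in node:
            return "Invalid choice. Please try again.", True
        _, child = node[choice]
        if isinstance(child, str):
            # Reached a final message: show it and end the session.
            return child, True
        node = child
    return render(node, "Choose an option:"), False
```

Because each step only needs short text and digit input, a flow like this works on any handset without a data connection, which fits the limited smartphone penetration described above.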

Ataraxia

November 2018

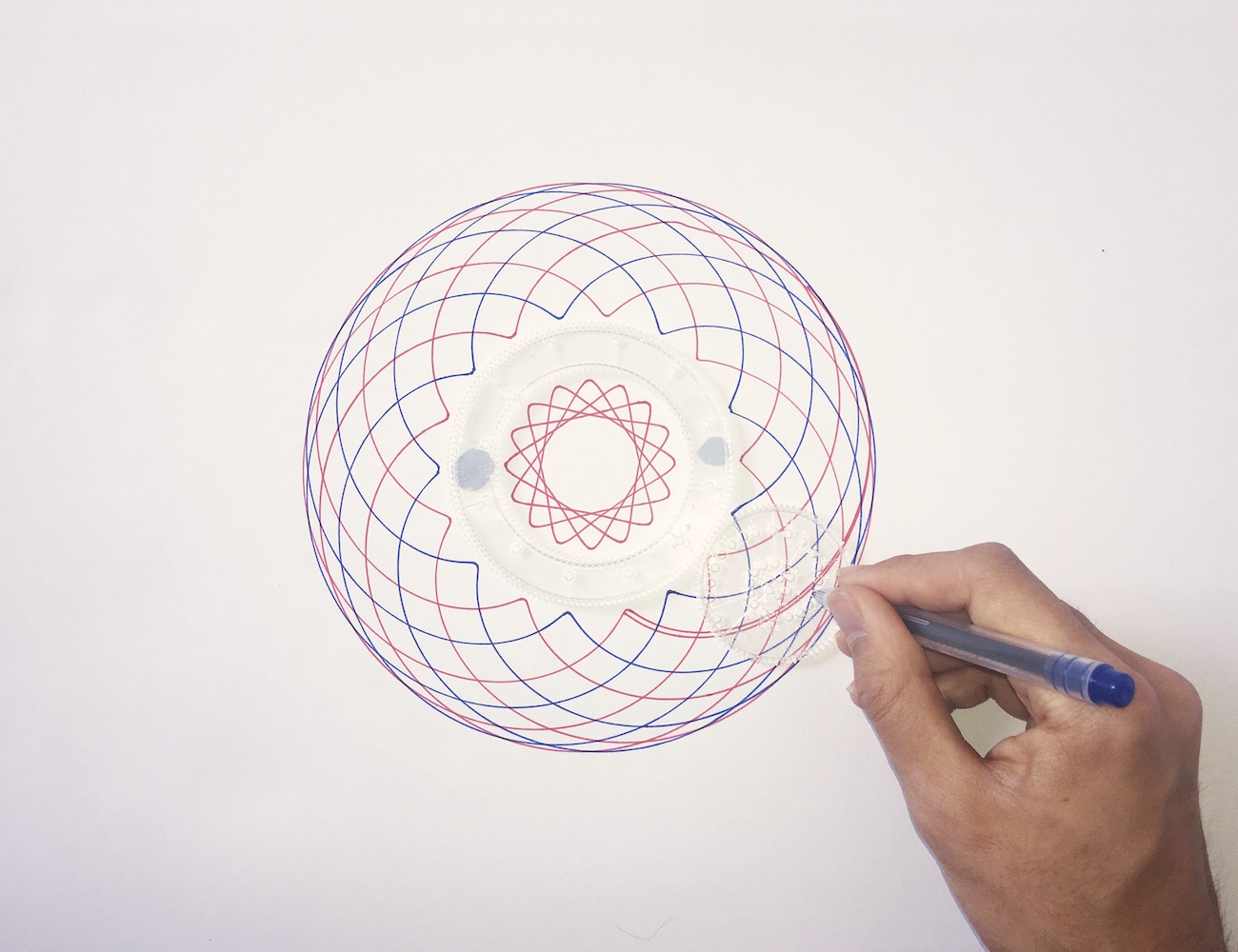

Inspired by the Ancient Greek word for ‘tranquility’, Ataraxia is a tool for passive introspection and relaxation. As if by magic, a small sphere quietly inscribes patterns across its sand-covered surface. Beneath the surface, a custom-designed mechanism sits hidden within a tulip-wood case. The materials and colours chosen for the product took direction from the seaside. Ataraxia allowed me to develop my appreciation for minimal mechanisms fused with careful craftsmanship.

The inspiration for the patterns generated by Ataraxia was the spirograph - a simple children's toy.

Spacescape

June 2018

Spacescape was a response to an Imperial College brief inviting playful and creative submissions for a new space the university was opening. I was part of a team of four that was funded to design and build this single-person portable escape room, themed around escaping a spaceship. I took responsibility for the design of the room, including the visual design, storytelling, usability and playtesting. Key challenges included integrating the design of the room with the storytelling of the game, as well as creating an immersive experience on a limited budget. Spacescape was also exhibited at Electromagnetic Field 2018.

The escape room was constructed in two parts which could be easily transported to the festival, before being reassembled onsite.

Working as a team of four, we collaborated closely to bring this project together.

Museum Alive - Augmented Reality in Museums

Jan – May 2018

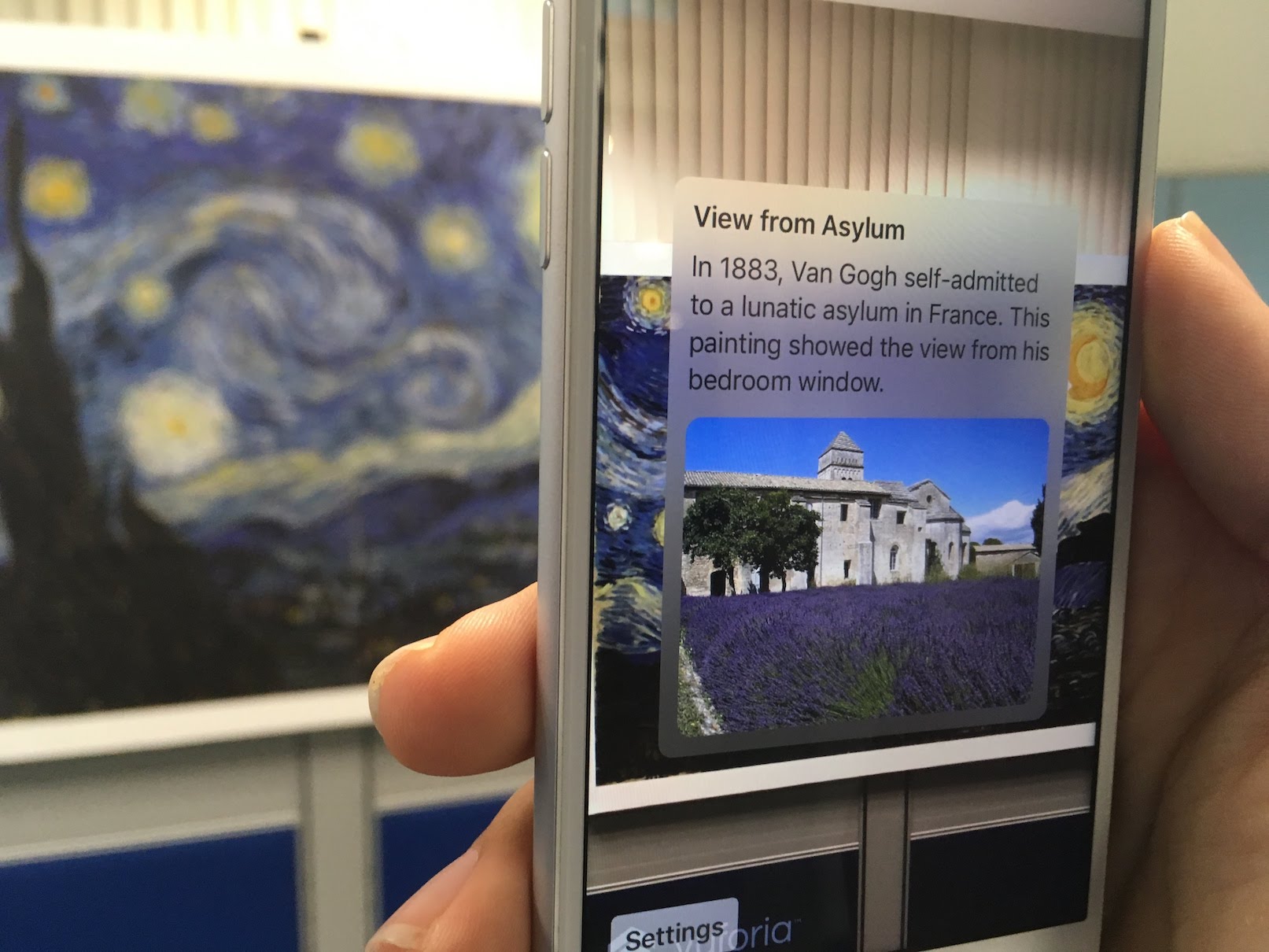

Visitor engagement at museums worldwide is falling. In 2018, I worked on a project in Singapore to design a new way for young visitors to interact with spaces. Through interviews and research, I realised that much of the context surrounding artworks is lost - buried within wordy textbooks or complex captions. What does that symbol mean? What was the artist trying to show here? Who is that person?

Museum Alive is an app that helps visitors connect with the artwork. Through augmented reality, viewers are shown curator-provided annotations over the artwork, which they can click on to read more detail. They can also add their own insights and learnings from the work, which are verified and then added in real time.

Continuum

March – July 2017

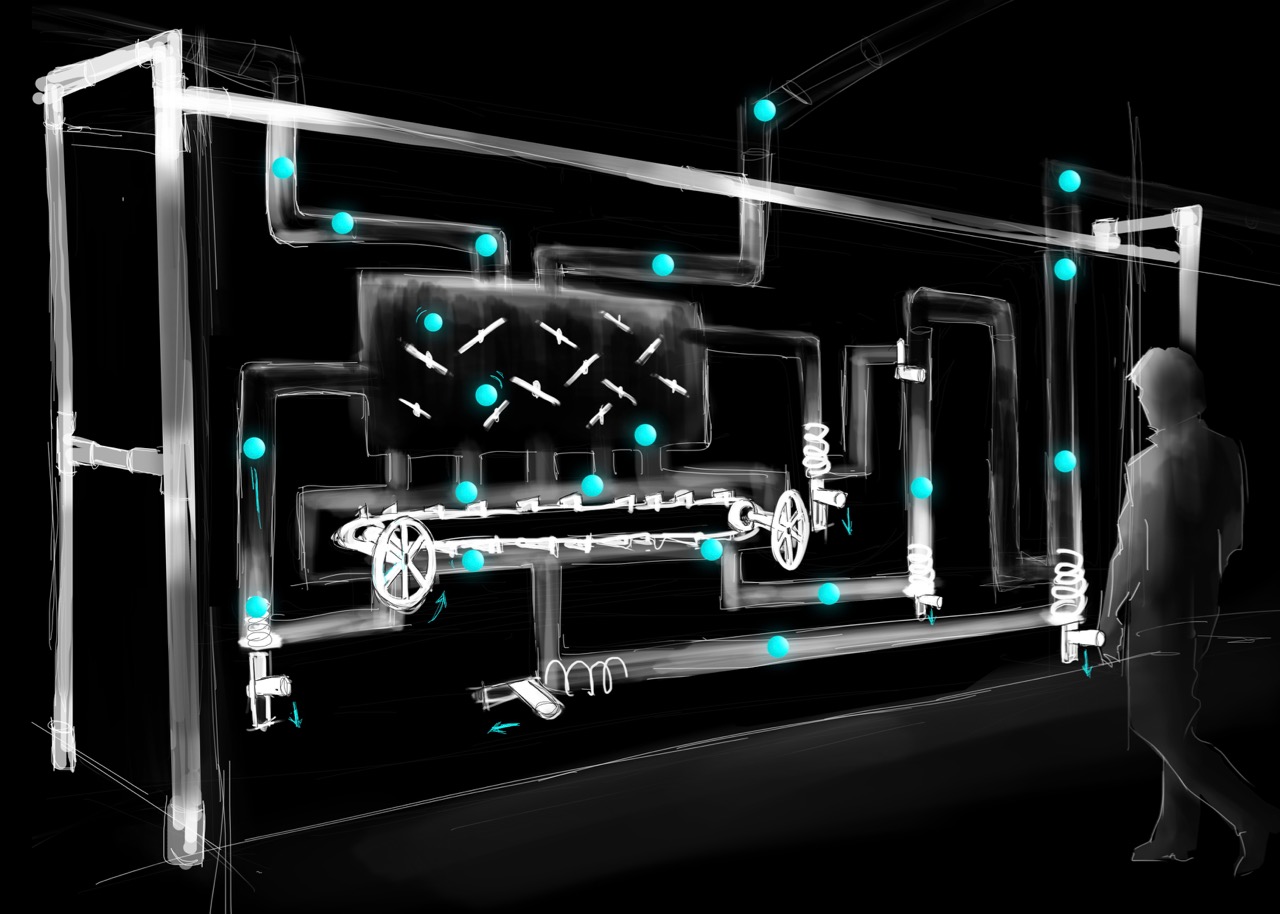

I was part of a team invited by the Imperial College Advanced Hackspace to create an interactive installation for the flagship Imperial Festival. With a brief asking us to represent ‘ideation’ while engaging audiences of all ages, we created seven interactive machines across a 1,600 sq ft space to engage visitors. I led the design and development of one of these machines, managing a team to prototype, build and test it in situ on a tight timeframe.

Visitors were invited to customise and pop small balls into the machine, whereupon they were transported, painted, mixed and propelled through the maze of connected machinery. Continuum entertained more than 4000 children and adults across one weekend, and was also invited to be shown at the Victoria and Albert Museum.

Over 4000 people experienced Continuum during the two days the exhibition was open.

A sketch of the machine, made during the concept phase of the project. Credit: Larasati

Putting the finishing touches on the project before it went on show.

The complete design as it was shown in the installation.

Many children enjoyed interacting with the machines.

Re~master

May - July 2017

Re~master is the result of an ambitious collaboration with designer Sabina Weiss. We worked together to create a new way of interacting with digital fabrication tools that still allows for rapid exploration, discovery and learning in a non-technical environment.

Whilst traditional embroidery is a hand-based activity, the transition to digital embroidery machines brought with it opaque and cumbersome computer-driven tools. We left those behind and developed an interface that allows makers to simply sketch their desired output and realise it in embroidery, giving users a sense of ownership over the process through a human-machine collaboration.

Re~master has been shown at the Victoria and Albert Museum as well as the Barbican Centre.

Fish-o-Tron

June 2015

This work was a collaboration with engineer Fu Yong Quah. We worked together under time pressure to design and prototype a low-cost system for automating a slow manual process in the fishing industry through the use of computer vision.

Measuring the size of fish is critical to the industry, as fish sizes are used to determine the health of fish stocks. Without this data, it can be hard to manage fisheries sustainably across the world. We introduced a low-cost tool for recording the length of fish using only a laminated sheet of printed paper and a $30 smartphone as a camera. The phone used markers printed on the paper to size the fish automatically and determine its length.
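The core arithmetic behind sizing an object from printed markers can be sketched as follows. This is an illustration rather than the Fish-o-Tron implementation: it assumes the marker and fish endpoints have already been located in the image (the detection step is out of scope), that the real-world spacing between two markers is known, and that the camera looks roughly straight down so no perspective correction is needed. The function names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) pixel coordinates in the photo


def pixels_per_mm(marker_a: Point, marker_b: Point, marker_spacing_mm: float) -> float:
    """Image scale, derived from two markers a known distance apart on the sheet."""
    pixel_distance = math.dist(marker_a, marker_b)
    if pixel_distance == 0 or marker_spacing_mm <= 0:
        raise ValueError("markers must be distinct and spacing must be positive")
    return pixel_distance / marker_spacing_mm


def fish_length_mm(
    snout: Point,
    tail: Point,
    marker_a: Point,
    marker_b: Point,
    marker_spacing_mm: float,
) -> float:
    """Convert the snout-to-tail pixel distance into millimetres."""
    scale = pixels_per_mm(marker_a, marker_b, marker_spacing_mm)
    return math.dist(snout, tail) / scale
```

For example, if two markers printed 200 mm apart appear 400 pixels apart in the photo, the scale is 2 pixels per millimetre, so a fish spanning 500 pixels would be recorded as 250 mm long.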

Fish-o-Tron won the global prize for the 2015 Fishackathon (supported by the US Department of State), beating over 150 other projects.

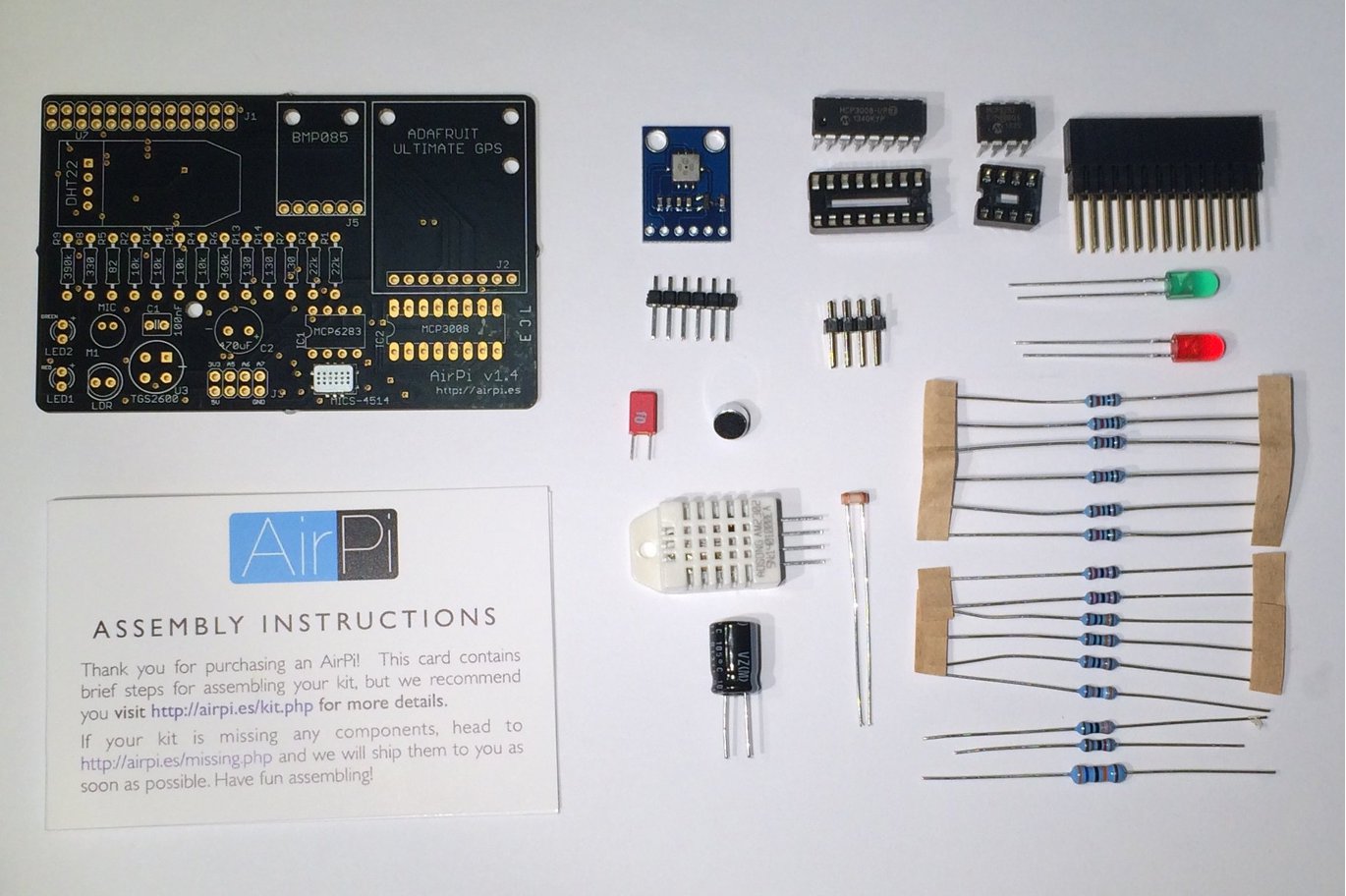

AirPi

2012 - 2014

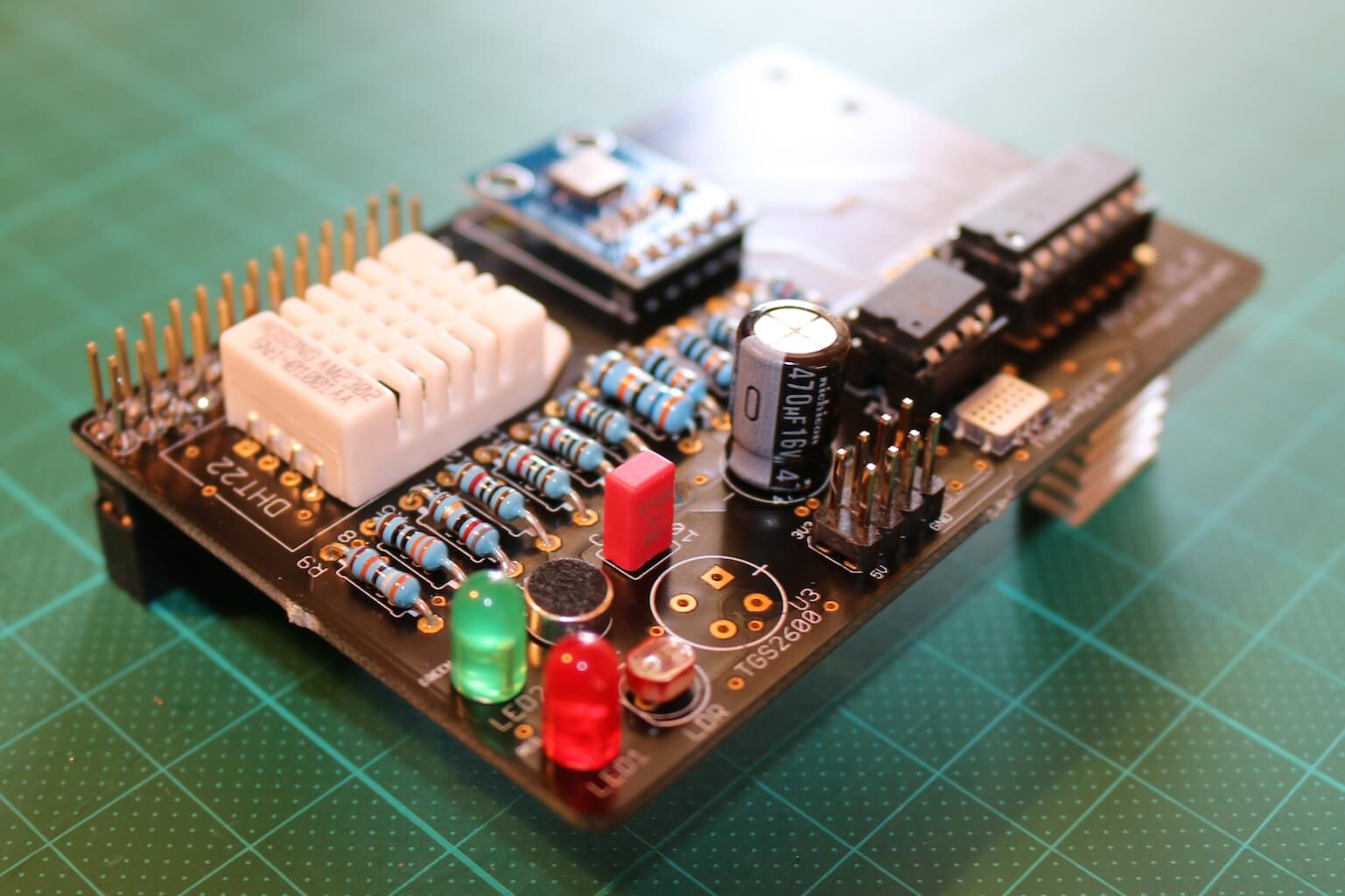

In 2012 I co-founded a startup to commercialise a concept for affordable community-based air quality and weather monitoring. Traditional IoT solutions for measurement are expensive and unwieldy, meaning that data on air quality is sparse in cities and often unavailable outside them.

AirPi took a different approach. By using low-cost sensors we brought the cost of a system down to under $100, making it more accessible. We sold over 1000 devices to countries as far afield as China and Argentina. Many were purchased by groups to help educate children, including the German Chaos Computer Club, who ran a workshop with them to educate local teenagers, and Vietnam’s Green Youth Collective, who used them to measure air quality in a city which didn’t have government-provided monitoring.

Winner of PA Consulting Raspberry Pi Competition

SmartMove

June 2012

House hunting is hard, and deciding which part of a city to live in can be more challenging still. With so many variables to compare it can be overwhelming, so we designed SmartMove as a way to narrow down the choices. Once you pick what matters to you, from good schools to affordable housing, we generate a customised heatmap showing which part of London is best for you.

You can then tap on a neighbourhood to learn more about it, as well as pick your criteria and have pins representing houses placed directly onto the map.
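One simple way to turn a user's chosen criteria into heatmap values is a weighted average of per-neighbourhood metrics, sketched below. This is an assumption about how such a heatmap could be computed, not a description of SmartMove's backend: the function name, the criteria keys, and the idea that each metric is pre-normalised to the range 0 to 1 are all hypothetical.

```python
from typing import Dict


def heatmap_scores(
    neighbourhoods: Dict[str, Dict[str, float]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Score each neighbourhood as the weighted average of its metrics.

    `neighbourhoods` maps a name to metrics normalised to 0..1 (higher is
    better); `weights` maps each criterion the user picked to its importance.
    Metrics missing for a neighbourhood are treated as 0.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("at least one criterion must have a positive weight")

    return {
        name: sum(w * metrics.get(criterion, 0.0) for criterion, w in weights.items())
        / total_weight
        for name, metrics in neighbourhoods.items()
    }
```

Each resulting score stays between 0 and 1, so it can be mapped directly onto a colour scale, and changing the weights re-ranks the neighbourhoods without touching the underlying data.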

SmartMove was a collaboration with Ainsley Escorce-Jones. I worked on the design and front-end development, and Ainsley wrangled data and created the backend.

Winner of Best in Show at Young Rewired State